In a world increasingly reliant on visual cues, artificial intelligence (AI) has emerged as a revolutionary force, particularly for people who are blind or visually impaired. With its ability to interpret data, recognize objects, and provide real-time assistance, AI is bridging gaps that were once insurmountable. This technological shift is helping the blind community lead more independent, fulfilling lives, making daily tasks such as reading, navigating, and social interaction more accessible than ever. From object recognition tools to personal assistants, AI is reshaping how blind people engage with their environment.

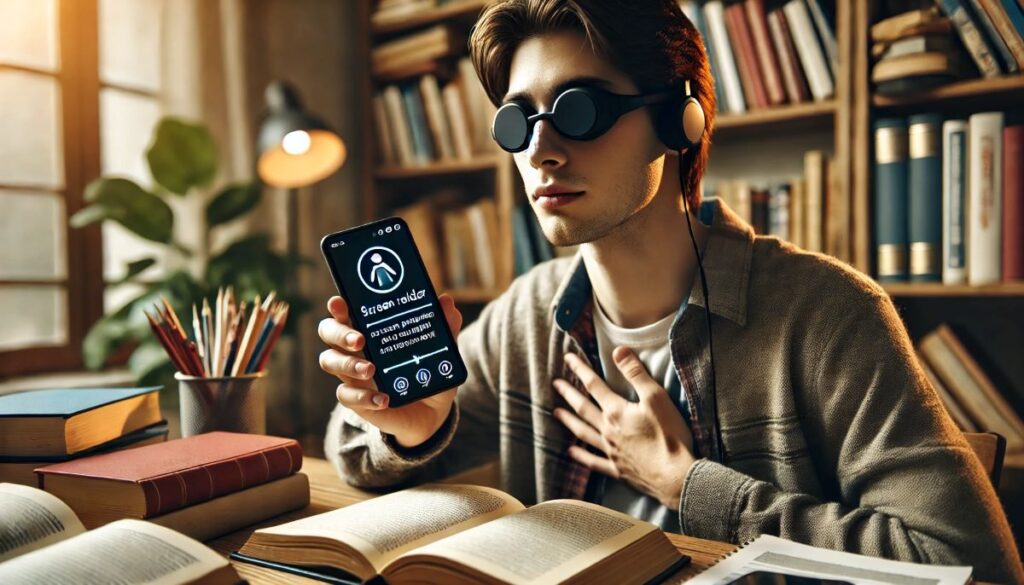

As the world continues to innovate, AI’s role in accessibility grows more sophisticated. Tools like ChatGPT, Seeing AI, and Be My AI offer distinct advantages, from describing images and reading text aloud to letting users converse more naturally with technology. These advancements go beyond simple convenience; they are essential to a more inclusive society, one where visual limitations no longer dictate access to information or participation. In this post, we discuss how AI is transforming everyday life for blind individuals, offering greater autonomy and a world of opportunities.

AI-Powered Object Recognition and Navigation

Navigating the world without sight has always been a major challenge for blind individuals, but AI-powered object recognition tools have brought about a profound change in this area. Apps like Microsoft’s Seeing AI and Be My AI (a feature of the Be My Eyes app) are central to this shift. They use a smartphone camera to identify objects, people, and text, in some cases in real time. For instance, Seeing AI can read printed materials, describe scenes, and even recognize faces, all through simple audio feedback. This makes everyday tasks like grocery shopping or finding specific items in a room far easier for blind users.

Wearable AI devices, such as AI-enabled smart glasses like Meta’s Orion prototype, are another potential game changer. Equipped with cameras and sensors, such glasses aim to detect obstacles, read street signs, and provide step-by-step navigational guidance, essentially functioning as a second set of eyes that describes a person’s surroundings in real time. On the desktop, Picture Smart AI, built into the JAWS screen reader, offers richer context about images, whether online or in documents. Together, these tools make independent navigation more practical and give users a more detailed understanding of their environment, both physical and digital.

AI is also enhancing established navigation aids through smart canes and AI-driven GPS systems. Some navigation apps now use AI to describe nearby buildings, traffic signals, or even people. In urban settings, these tools help blind individuals move more confidently through busy intersections and crowded spaces. Combined, AI-powered apps and wearables let users travel more independently and feel safer in unfamiliar environments.

Reading and Accessing Information

The printed word, long a barrier for blind individuals, is now more accessible than ever thanks to AI. Optical character recognition (OCR) combined with modern AI has opened up vast amounts of previously inaccessible information. Apps such as Seeing AI and Be My AI can read text on paper, from books to restaurant menus, and even some handwritten notes. They turn printed material into speech in real time, providing immediate access to content. Whether it’s reading a product label in a store or going through the daily mail, these apps make everyday tasks much simpler for the blind community.

Beyond OCR, AI chatbots like ChatGPT and Gemini have further changed how blind people interact with the digital world. They can serve as conversational companions, offering detailed explanations, answering questions, and helping with tasks like writing or problem-solving. Unlike traditional voice assistants, these chatbots hold more nuanced, context-aware conversations. Blind individuals can use them for everything from brainstorming ideas to getting clarification on complex topics. Because both can also accept images as input, they can describe photos, charts, and other visual content in plain language, adding another layer of accessibility in education, work, and everyday life.

AI has also significantly improved alt-text for online content. Social media platforms like Facebook and Instagram now use AI to generate alt-text automatically, giving blind users a description of each image. Tools like Picture Smart AI go further, analyzing the elements within an image and delivering more context. By offering meaningful descriptions of visual content, AI helps ensure that blind individuals are no longer left out of conversations that rely on images. Whether it’s describing the people in a photo or explaining the content of a meme, AI-powered alt-text allows fuller participation in the digital world.

Personal Assistance and Home Management

AI is not only transforming how blind people navigate and access information; it is also changing how they manage their homes. AI-powered smart home devices let blind individuals control their living spaces more independently. With assistants like Alexa and Google Assistant, users can operate lights, locks, thermostats, and even some kitchen appliances by voice alone. Adjusting the room temperature or turning off the lights before bed no longer requires finding a switch or reading a visual control panel, which adds both convenience and safety.

AI-driven personal assistants are also becoming more adaptive, learning individual preferences and tailoring their responses accordingly. For instance, they can help schedule appointments, set medication reminders, or send messages by voice. Some assistants can even identify items through object recognition, which is useful for tasks in the kitchen or around the house. These technologies give blind individuals more autonomy and reduce reliance on others for simple day-to-day tasks.

Smart speakers and AI-enabled appliances, such as robot vacuums, have also made household chores easier to manage. Whether it’s cleaning floors, managing groceries, or even brewing coffee, AI-based systems can now take over many routine household tasks. These devices integrate smoothly into everyday life, creating a connected ecosystem that lets blind individuals live more independently. As AI continues to evolve, we can expect more features designed for the specific needs of the blind community, from hands-free cooking to automated home security systems.

AI and Social Inclusion

AI’s impact on social inclusion is one of its most powerful aspects, helping blind individuals engage more fully in both online and offline communities. Social media has traditionally been a challenge for people with visual impairments because of its highly visual nature. Here, AI-generated alt-text has made significant strides: platforms such as Facebook and Instagram automatically describe images, allowing blind users to engage with visual content more effectively. Whether it’s learning how many people are in a group photo or understanding the elements of a landscape shot, these descriptions enable fuller participation in social media.

AI tools are also improving face-to-face interactions. Seeing AI can recognize people the user has previously saved, and image-description tools like Be My AI can describe facial expressions and gestures captured in a photo. This can boost confidence in social situations, helping users know who they are talking to and respond accordingly. By helping bridge the gap in non-verbal communication, AI is making workplaces, social gatherings, and family settings more inclusive for blind individuals.

In addition, AI-powered speech-to-text tools make communication smoother across platforms. Blind individuals can dictate messages, send emails, or take part in group chats without relying on visual interfaces. These tools support seamless communication and foster a sense of belonging in both personal and professional settings. Whether it’s joining a virtual meeting or sharing experiences in a group chat, AI helps ensure that blind individuals are included in the conversations that shape modern life.

Conclusion

The transformative impact of AI on the lives of blind individuals is undeniable. From navigating complex urban environments to managing the home hands-free, AI is changing daily living. With tools like ChatGPT, Seeing AI, Be My AI, and Picture Smart AI, blind people now have unprecedented access to information and social participation. These technologies let them independently perform tasks that were once daunting or impossible, making the world more inclusive and accessible.

As AI continues to evolve, its potential to improve accessibility grows. Future iterations could help blind individuals with even more complex tasks, such as interpreting emotional nuances in conversations or following live events through real-time descriptions. Advances in alt-text and image recognition, for example, could lead to more detailed descriptions, allowing blind users to engage fully with all kinds of visual media. Continued development of wearable AI devices should also allow more seamless integration into daily life, providing greater autonomy in both personal and public settings.

Looking ahead, the possibilities are exciting. AI’s growing ability to interpret and describe complex visual and social environments could bring even greater independence for blind people. Future systems may not only recognize objects and people but also anticipate needs and assist with increasingly nuanced tasks. Advancements like these will make the world more navigable and inclusive, pushing the boundaries of what blind individuals can achieve.